INTRODUCTION

Consciousness has intrigued humanity for centuries. It is only now with the emergence of complex systems science and systems biology that we are beginning to get a deeper understanding of consciousness. Here we introduce a computational framework for designing artificial consciousness and artificial intelligence with empathy. We hope this framework will allow us to better understand consciousness and design machines that are conscious and empathetic. We also hope our work will help shift the discussion from a fear of artificial intelligence towards designing machines that embed our values in them. Consciousness, intelligence and empathy would be worthy design goals that can be engineered in machines.

ARCHITECTURE FOR ARTIFICIAL INTELLIGENCE

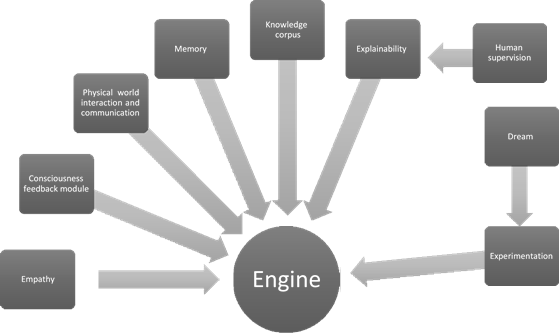

In this section we outline a computational architecture for intelligent machines that can be considered to be conscious and empathetic. The key components of this architecture are described below and shown in Figure 1. Each component is also described in detail in the subsequent sections.

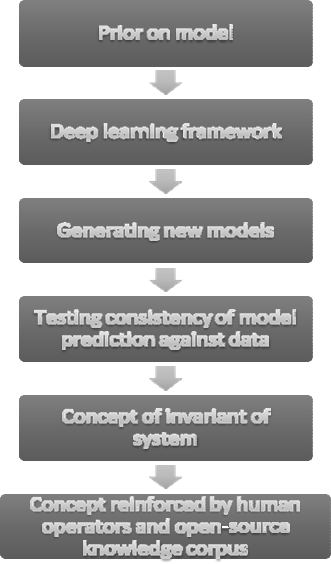

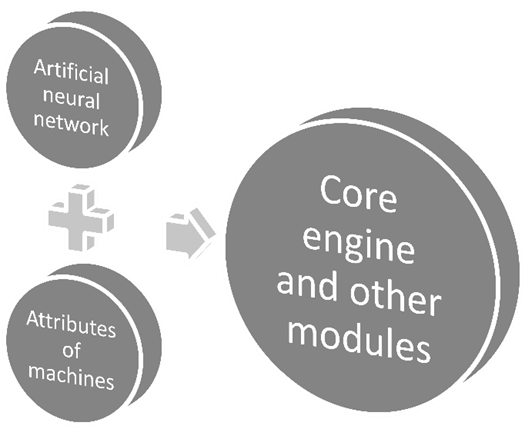

CORE COMPUTATIONAL ENGINE

The core engine is a deep learning framework that analyzes data and formulates concepts. The hidden layers of the neural network will represent concepts. For example, suppose the deep learning framework analyzes data from an oscillating pendulum. It will build an internal model of this data and discover an invariant such as the period of oscillation (Figure 2)[1]. From the same data, the framework will form a concept of the period of a pendulum. This concept will be reinforced by human input on what it actually means and by parsing the corresponding definition from an open-source knowledge corpus such as Wikipedia (Figure 2). We hypothesize that the more these machines connect such disparate sources of information and formulate concepts, the more knowledge they will have and the more intelligent and conscious they will become. These machines will also collaborate with other machines and human operators to communicate and share concepts; together they will build on these concepts to generate additional insight and knowledge. Our hope is that humans and networks of machines can collaborate, leading to a collective amplified intelligence.

INTELLIGENCE

The machine will assimilate knowledge from supervised human input and an open-source knowledge corpus (like Wikipedia). It will use a deep learning framework to analyze data and formulate concepts (the different hidden layers will represent concepts). It will then generate internal models, experiment with variations of these models, and formulate concepts to explain them (similar to a previous framework[1]). Let us again assume that the machine is given data on an oscillating pendulum. It will form an internal model and mutate that model (using genetic programming or Bayesian techniques) whilst ensuring that the model predictions match the empirical data (Figure 2).
It will then analyze the resulting model to find invariants (quantities that do not change), such as the period of oscillation of a pendulum. When this is performed in a deep learning framework, the hidden layers of the network will represent the concept of a period of oscillation. These concepts will be fed into the explainability module, which will translate them into a human-understandable format. Human operators will then reinforce the concept and help generalize it to other dynamical systems, much as a teacher would teach this subject.
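The pendulum example can be made concrete with a small sketch. It is an illustration under stated assumptions, not the framework's implementation: the data are synthetic and noise-free, the model is the small-angle pendulum equation, plain random-mutation hill climbing stands in for genetic programming, and all function names (`observe_pendulum`, `model_error`, `fit_by_mutation`, `period_from_crossings`) are hypothetical.

```python
import math
import random

G = 9.81  # gravitational acceleration in m/s^2 (assumed known to the machine)


def observe_pendulum(length, theta0=0.1, dt=0.01, t_max=10.0):
    """Synthetic, noise-free small-angle data: theta(t) = theta0 * cos(sqrt(G/L) * t)."""
    omega = math.sqrt(G / length)
    return [theta0 * math.cos(omega * i * dt) for i in range(int(t_max / dt))]


def model_error(length, theta, dt):
    """Mean squared mismatch between the model theta'' = -(G/L) * theta and the data."""
    total = 0.0
    for prev, cur, nxt in zip(theta, theta[1:], theta[2:]):
        accel = (prev - 2.0 * cur + nxt) / dt ** 2  # finite-difference acceleration
        total += (accel + (G / length) * cur) ** 2
    return total / (len(theta) - 2)


def fit_by_mutation(theta, dt, start_length=0.5, steps=500, seed=0):
    """Hill climbing: mutate the model's length, keep mutations that fit the data better."""
    rng = random.Random(seed)
    best, best_err = start_length, model_error(start_length, theta, dt)
    for _ in range(steps):
        candidate = best * math.exp(rng.gauss(0.0, 0.1))  # multiplicative mutation
        err = model_error(candidate, theta, dt)
        if err < best_err:
            best, best_err = candidate, err
    return best


def period_from_crossings(theta, dt):
    """Estimate the period from the spacing of downward zero crossings in the data."""
    times = []
    for i in range(len(theta) - 1):
        y0, y1 = theta[i], theta[i + 1]
        if y0 > 0.0 >= y1:
            times.append((i + y0 / (y0 - y1)) * dt)  # linear interpolation
    gaps = [b - a for a, b in zip(times, times[1:])]
    return sum(gaps) / len(gaps)


dt = 0.01
data = observe_pendulum(length=1.0, dt=dt)
fitted_length = fit_by_mutation(data, dt)
model_period = 2.0 * math.pi * math.sqrt(fitted_length / G)  # invariant implied by the model
measured_period = period_from_crossings(data, dt)            # invariant read off the data
print(f"fitted length: {fitted_length:.3f} m")
print(f"period from model: {model_period:.3f} s, from data: {measured_period:.3f} s")
```

The error is measured on the equation of motion rather than on the trajectory because that makes the search landscape single-peaked, so simple mutation is enough; a trajectory-matching error would need a more careful search. The sketch stops at the numerical invariant and does not attempt the concept-formation step in the hidden layers of a network.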

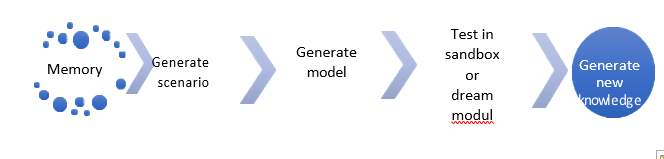

We note that this module can also be implemented in a Bayesian setting (Figure 2). The different models can be varied or mutated using Markov chain Monte Carlo in a non-parametric Bayesian model. Additional information from human operators can be supplied as priors in the Bayesian model[2].

EMPATHY MODULE

The empathy module would build and simulate a minimal mental model of others (robots or humans) so that they can be understood and empathized with. We note that this is a computationally expensive module (see Section Designing Empathy in Machines), so constraints would need to be enforced on how much time is spent processing that information.

CONSCIOUSNESS MODULE

We define consciousness as what information processing feels like in a complex system (defined in detail in Section An Information Theoretic View of Artificial Consciousness). The consciousness module (Figure 4) is a deep learning network with feedback that allows it to analyze itself. The critical factor is that the module feeds back into itself. It would also need inputs from the intelligence module. We argue, like others before[3], that consciousness is what information processing feels like. Through learning and human feedback, consciousness can also be learnt over time (bicameral theory of mind and consciousness[4]). We hypothesize that the proposed engineered system would build up consciousness over time. Communication with other robots and humans is also critical for building a collective intelligence, so these machines will communicate with other artificially intelligent machines. Finally, this module will have agents (as in agent-based models) combined with machine learning or reinforcement learning. Consciousness will be an emergent property of this system.

DREAM MODULE

The dream module is a sandbox environment to simulate all other modules with no inhibitions. This is similar to DeepDream[5][6] with feedback into itself.
This module also has connections to the experiment module.

EXPERIMENT MODULE

The experiment module will play or experiment with systems in a protected sandbox environment (similar to another framework[1]). The input to this module would be data on a particular system of interest. The module would build a model, perturb it, and observe how consistent it remains with the data. The output of this module would be fed into a neural network to form concepts (the hidden layers of the deep learning network would represent concepts).

EXPLAINABILITY MODULE

This module will run interpretable machine learning algorithms to allow human operators to understand actions taken by the machine and gain insight into the mechanisms behind the learning.

UNSUPERVISED LEARNING

We propose that these systems should also incorporate information from curated repositories like the Wikipedia knowledge corpus, similar to what was done for the IBM Watson project.

SUPERVISED LEARNING

The concepts formed by the engine and the output of other modules will be tested by humans. Humans will interact with the explainability module to understand why particular actions were taken or concepts formed. Human operators would ensure that these machines have a broad goal or purpose and that all their actions are consistent with some ethical structure (like Asimov’s Laws of Robotics). Human operators will also try to minimize harm to the machines themselves. We expect that different machines will form distinct personalities based on their system state, data and human input. This is an opportunity for us to personalize these machines (if used in homes). This step is also the most vulnerable: humans with malicious intent can embed undesirable values in machines, and hence considerable care should be taken in supervising these machines.

MEMORY

Finally, the machine will have memory.
It will record all current and previous states of its artificial neural network, learning representations and interactions with human operators. It will have the capability to play back these memories (in a protected sandbox environment), perform role-playing and simulate future moves. This will need to be done in the dream module. Our hope is that machines will be able to use past memories to learn from them, generate new future scenarios from past data and train on this data. These “reveries” will allow the machines to effectively use data on past actions to generate new knowledge. This reverie architecture generates new knowledge by combining stored information with a capability to process that information (Figure 3). In a certain sense, these machines will be able to learn from past mistakes and adapt to different scenarios. We hypothesize that this will lead to higher levels of intelligence and ultimately lead to a form of consciousness.
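A minimal sketch of how such a memory with "reveries" might look is given below. It is only an illustration: the class `ReverieMemory`, the `Episode` fields, the fixed capacity and the jitter-based perturbation are all assumptions introduced here, and the step of evaluating generated scenarios in the dream module is left out.

```python
import random
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    """One remembered interaction (field names are hypothetical)."""
    state: tuple
    action: str
    outcome: float


class ReverieMemory:
    def __init__(self, capacity=1000, seed=0):
        # Bounded store (an assumption of this sketch): oldest episodes drop out first.
        self._episodes = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def __len__(self):
        return len(self._episodes)

    def record(self, state, action, outcome):
        self._episodes.append(Episode(tuple(state), action, outcome))

    def replay(self, batch_size):
        """Play back a random batch of stored episodes, unchanged."""
        pool = list(self._episodes)
        return self._rng.sample(pool, min(batch_size, len(pool)))

    def reverie(self, batch_size, jitter=0.05):
        """Generate new scenarios by perturbing remembered states.

        The outcome of a perturbed scenario is unknown, so only (state, action)
        pairs are returned; they would have to be evaluated in a sandbox.
        """
        scenarios = []
        for episode in self.replay(batch_size):
            new_state = tuple(x + self._rng.uniform(-jitter, jitter) for x in episode.state)
            scenarios.append((new_state, episode.action))
        return scenarios


memory = ReverieMemory(capacity=3)
for step in range(5):
    memory.record(state=(0.1 * step, 1.0), action="push", outcome=float(step))

print(len(memory))        # only the most recent episodes are retained
print(memory.replay(2))   # past experience, played back as recorded
print(memory.reverie(2))  # new scenarios derived from past experience
```

Separating `replay` from `reverie` keeps recorded experience distinct from generated scenarios, so training on imagined data can never overwrite what actually happened.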

CONSCIOUSNESS, INTELLIGENCE AND LIFE: PERSPECTIVES FROM INFORMATION PROCESSING

We hypothesize that consciousness, intelligence and life are different forms of information processing in complex systems[7]. Information and the computational substrate needed to process that information serve as the basis of life, intelligence and consciousness. The minimal computational unit needed to create this artificial intelligence can be an artificial neuron, reaction diffusion computers[7][8] or neuromorphic computing systems[9]. Any of these computing substrates can be used to implement the architecture shown in Figure 1.

AN INFORMATION THEORETIC VIEW OF ARTIFICIAL CONSCIOUSNESS

Consciousness is characterized by feedback loops in a complex system. Consciousness is what information processing feels like when there are feedback loops in a complex system that processes information[1][3][10][11]. Consciousness has also been hypothesized to be an emergent property of a complex system[12]. It is like asking what makes water liquid: liquidity is not only a property of the water molecule but also an emergent property of the entire system of billions of water molecules. Hence, we hypothesize that consciousness is also an emergent property of a complex information processing system with feedback.

DESIGNING CONSCIOUSNESS IN MACHINES

How can we encode these principles and design consciousness in a computer? A tentative basic definition of a conscious machine is “a computing unit that can process information and has feedback into itself”. An architecture of a consciousness module is presented below and shown in Figure 4. Would a computer recognize that it is a computer? We can show a computer images of other computers to help it recognize itself (using deep learning based image recognition algorithms). We can also, for example, show the machine images of smartphones, birds and buildings to reinforce the concept that it is not any of these things (non-self). Finally, we can design an algorithm to select out all images of non-self; all that remains is self. This kind of algorithm can be used to design a sense of self in machines. Such a supervised learning approach is similar to negative selection in biology[13], where the immune system learns to discriminate between all cells in the body (self) and all that is foreign and potentially pathogenic (non-self). A complementary approach is to exhaustively define all qualities that uniquely define self, such as the size of the computer, its colour, amount of memory, identification marks, and memory of past actions taken by this machine. We can also show the computer an image of itself.
All these attributes can then be coupled to a deep learning based image recognition or self-recognition program. The different hidden layers of a deep learning module would encapsulate the concept of self (based on images or other attributes). Both these strategies can be combined to design a basic level of self-awareness and consciousness in computers (Figure 4). We see some parallels to the turtle robots designed by William Grey Walter that could sense, move, and recharge[14]. These simple robots could follow a light source with a sensor, move around and recharge their batteries when required. In one experiment, a light source was placed on top of the robot and the robot itself was placed in front of a mirror. It was claimed that the robot began to twitch; W.G. Walter suggested that this robot showed a simple form of self-awareness[14]. We also argue that this is a basic level of consciousness and self-awareness. Similar principles can be used to design consciousness in machines. Finally, we note that there are some well-known cognitive architectures that can be used to implement this form of artificial intelligence[15].
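The self versus non-self idea described above can be sketched in the style of negative-selection algorithms from the artificial immune systems literature. This is a toy illustration, not the proposed module: the two-dimensional feature vectors stand in for whatever a deep network would extract from images or attributes, and the names `train_detectors` and `is_self`, the detector count and the matching radius are all assumptions.

```python
import math
import random


def train_detectors(self_samples, n_detectors=200, radius=0.15, seed=0, max_tries=100_000):
    """Generate random detectors and discard any that match a known 'self' sample.

    Features are assumed to be normalized to the unit hypercube [0, 1]^dim.
    """
    dim = len(self_samples[0])
    rng = random.Random(seed)
    detectors = []
    for _ in range(max_tries):
        if len(detectors) == n_detectors:
            break
        candidate = tuple(rng.random() for _ in range(dim))
        # Negative selection: keep only candidates that do NOT react to self.
        if all(math.dist(candidate, s) > radius for s in self_samples):
            detectors.append(candidate)
    return detectors


def is_self(sample, detectors, radius=0.15):
    """A sample counts as self if no surviving detector reacts to it."""
    return all(math.dist(sample, d) > radius for d in detectors)


# Hypothetical feature vectors describing the machine itself.
self_samples = [(0.20, 0.20), (0.25, 0.18), (0.18, 0.24)]
detectors = train_detectors(self_samples)

print(is_self((0.20, 0.20), detectors))  # a known self sample
print(is_self((0.80, 0.80), detectors))  # a point far from every self sample
```

By construction no detector lies within `radius` of a training self sample, so known self samples are always accepted. Whether an unseen point is rejected depends on how densely the random detectors cover the rest of the feature space.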

HUMAN SUPERVISION AND INTERPRETABILITY

Conscious machines will also need to explain themselves. The biological brain sometimes struggles to explain itself to others, and people sometimes find it hard to explain their actions or motives. Why do we love someone? Why do we feel afraid when we see a snake? The brain is a complex information processing system that does not lend itself very well to explanation. Some progress has been made in making artificial neural networks interpretable[16][17]. These are approaches where artificial neural networks are turned on themselves to analyze their own actions. Similar techniques can be used to implement an explainability module (Figure 1) that can explain specific actions taken by the machine to human operators. Making artificial intelligence interpretable or explainable will help human operators understand machines and help guide their training[18].

DESIGNING EMPATHY IN MACHINES

Empathy is when we try to deeply understand another person. The brain is like a Turing machine, and empathy is similar to running another Turing machine within it. Deciding whether a Turing machine simulated by another Turing machine will ever halt is undecidable (the halting problem). We therefore hypothesize that, in general, empathy is undecidable. It is also computationally expensive, which is perhaps why biological organisms do not have a lot of empathy. Empathy is also intimately connected with a sense of self. Having a sense of self is essential for survival and may be why it is evolutionarily important to have consciousness. There are people called synesthetes who have a heightened sense of compassion for other people. They feel intense emotions and empathy for other people, to the point where human interactions exhaust them and they can become homebound. Essentially they are simulating other people and feeling what other people are feeling. They also find it difficult to separate their own self from other people.
This may be the reason we have a sense of self. We hypothesize that having a sense of self aids survival and delineates self from prey or predator. This may also be the reason we do not have a lot of empathy. If we did, we would not have a strong sense of self and might be at a selective disadvantage. Empathy and consciousness are also related. Empathy is the ability to run a simulation of what another person is feeling (simulating another person’s mental state). Apart from being undecidable in general, empathy is also inversely related to a sense of self and hence may be at a selective disadvantage. Evolution may have decided that a lot of empathy is not good for individual survival. However, we have the unique opportunity of being able to engineer machines that have more empathy than biological organisms. We suggest the possibility of programming empathy in a computer. We may have to impose limits on how much time is spent simulating the state of another person or machine. In general this is undecidable, but we may be able to implement fast approximations.

DISCUSSION

We present a computational framework for engineering intelligence, empathy and consciousness in machines. This architecture can be implemented on any substrate that is capable of computing and information processing[7][8][19][20][21][22][23][24][25][26][27][28][29][30][31][32][33][34]. We tentatively define consciousness as what information processing feels like in a complex system[3]. Consciousness is also like having a sense of self and is an emergent property of a complex information processing system with feedback. Our proposed architecture for intelligent, conscious and empathetic machines will assimilate knowledge using supervised learning, form concepts (using a deep learning framework) and experiment in a sandbox. Our proposed machines will be capable of a form of consciousness by using feedback.
They will also be capable of empathy by simulating the artificial neural state or conditions of other machines or humans. We hypothesize that empathy and emotions have been pre-programmed over evolution. Empathy may confer an evolutionary advantage. We also recognize why too much empathy can be a disadvantage. Empathy can be achieved in robots through operator training (reinforcement learning) and by allowing machines to analyze the artificial neural state of other machines or the personal history of humans. We have the unique opportunity of being able to engineer machines that may have more empathy than biological organisms. Communication technologies and human supervision can help accelerate the onset of artificial consciousness, much as they are hypothesized to have led to the emergence of consciousness in humans[4]. Consciousness can be learnt over time, as has been hypothesized before[4]. We suggest a computational approach to engineer this in machines with close human supervision. Ultimately, we may be able to engineer higher levels of consciousness. More levels of feedback and more complexity in information processing may lead to higher levels of consciousness. The union of machine intelligence with our biological intelligence may also give us access to higher levels of consciousness. Computing paradigms that are not constrained by physical space or that use different computing substrates (as proposed in different information processing systems[7][35] and in biology[20][21][22][23][24][25][26][27][28][29][30][31][32][33][34]) may be capable of higher levels of consciousness. Our greatest contribution as a species may be that we introduce non-biological consciousness into the Universe. We foresee a number of dangers. The scope of this computational framework (presented in Figure 1) is very broad; it may currently be beyond the reach of individuals and feasible only for giant corporations.
Malicious corporations and conglomerates of individuals may misuse such an artificial intelligence, for example by failing to invest in empathy. It may be worthwhile to create non-profits that advocate for designing empathy in future intelligent machines and that educate the public about the potential benefits of such technologies. Another danger is that we mistreat these artificial creations. What ethics might we need to create for conscious machines[36]? Would it be ethical to turn off or destroy such an artificially intelligent and conscious being? The creation of artificially conscious and intelligent machines will challenge us to come up with new ethical structures. This may be the first time that consciousness is engineered rather than emerging on its own, and these beings would deserve as much sympathy as we show towards other species. We hope that this framework will allow us to better understand consciousness and design machines that are conscious and empathetic. We hope this will also shift the discussion from a fear of artificial intelligence towards designing machines that embed our cherished values in them. Consciousness, intelligence and empathy would be worthy design goals that can be engineered in machines.